Alpha transparency effect

In real-time audio and video interaction, segmenting the broadcaster's background and making it transparent can make the interaction more engaging and immersive, and improve the overall interactive experience.

Consider the following sample scenarios:

- Broadcaster background replacement: The audience sees the broadcaster's background in the video replaced with a virtual scene, such as a gaming environment, a conference, or a tourist attraction.

- Animated virtual gifts: Dynamic animations are displayed with a transparent background so that they do not obscure live content when multiple video streams are merged.

- Chroma keying during live game streaming: The audience sees the broadcaster's image cropped and positioned within the local game screen, making it appear as though the broadcaster is part of the game.

Prerequisites

Ensure that you have implemented the SDK quickstart in your project.

Implement alpha transparency

Choose one of the following methods to implement the Alpha transparency effect based on your specific business scenario.

Custom video capture scenario

The implementation process for this scenario is illustrated in the figure below:

Take the following steps to implement this logic:

- Process the captured video frames and generate Alpha data. You can choose from the following methods:

  - Method 1: Call the pushExternalVideoFrame[2/2] method and set the alphaBuffer parameter to specify Alpha channel data for the video frames. This data matches the size of the video frames, with each pixel value ranging from 0 to 255, where 0 represents the background and 255 represents the foreground.

    Caution: Ensure that alphaBuffer is exactly the same size as the video frame (width × height); otherwise, the app may crash.

  - Method 2: Call the pushExternalVideoFrame method and use the setAlphaStitchMode method in the VideoFrame class to set the Alpha stitching mode. Construct a VideoFrame with the stitched Alpha data.

- Render the local view and implement the Alpha transparency effect.

  - Create a TextureView object for rendering the local view.
  - Call the setupLocalVideo method to set the local view:
    - Set the enableAlphaMask parameter to true to enable alpha mask rendering.
    - Set the TextureView as the display window for the local video.

- Render the remote view and implement the Alpha transparency effect.

  - After receiving the onUserJoined callback, create a TextureView object for rendering the remote view.
  - Call the setupRemoteVideo method to set the remote view:
    - Set the enableAlphaMask parameter to true to enable alpha mask rendering.
    - Set the TextureView as the display window for the remote video stream.
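Step 1 of the procedure above leaves open how the Alpha data is generated. One simple option for a green-screen setup is chroma keying. The following Java sketch assumes the custom capture source delivers packed RGBA pixels (4 bytes per pixel); the class name, key color, and distance threshold are hypothetical choices for illustration, not SDK API. It produces one byte per pixel (0 for background, 255 for foreground) and checks that the result is exactly width × height bytes, as the caution for Method 1 requires. How the array is attached to the frame passed to pushExternalVideoFrame[2/2] is defined in the SDK API reference.

```java
/** Hypothetical helper (not part of the SDK): derives Alpha data from an RGBA frame by chroma keying. */
final class ChromaKeyAlpha {
    private ChromaKeyAlpha() {}

    /**
     * @param rgba      packed RGBA pixels, 4 bytes per pixel (assumed capture format)
     * @param keyR      red component of the key color treated as background
     * @param keyG      green component of the key color
     * @param keyB      blue component of the key color
     * @param threshold maximum RGB distance from the key color still treated as background
     * @return one Alpha byte per pixel: 0 = background, 255 = foreground
     */
    static byte[] buildAlpha(byte[] rgba, int width, int height,
                             int keyR, int keyG, int keyB, int threshold) {
        int pixels = width * height;
        if (rgba.length != pixels * 4) {
            throw new IllegalArgumentException("RGBA buffer does not match width x height");
        }
        byte[] alpha = new byte[pixels];
        int thresholdSq = threshold * threshold;
        for (int i = 0; i < pixels; i++) {
            int r = rgba[4 * i] & 0xFF; // mask to read bytes as unsigned 0..255
            int g = rgba[4 * i + 1] & 0xFF;
            int b = rgba[4 * i + 2] & 0xFF;
            int dr = r - keyR, dg = g - keyG, db = b - keyB;
            boolean background = dr * dr + dg * dg + db * db <= thresholdSq;
            alpha[i] = (byte) (background ? 0 : 255);
        }
        return alpha;
    }

    /** Guards against the size mismatch the caution warns about: Alpha must be exactly width x height. */
    static void requireMatchingSize(byte[] alpha, int width, int height) {
        if (alpha == null || alpha.length != width * height) {
            throw new IllegalArgumentException("alphaBuffer must be exactly width x height bytes");
        }
    }
}
```

A hard 0/255 cut keeps the sketch short; real keyers usually soften edges by mapping distances near the threshold to intermediate Alpha values.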

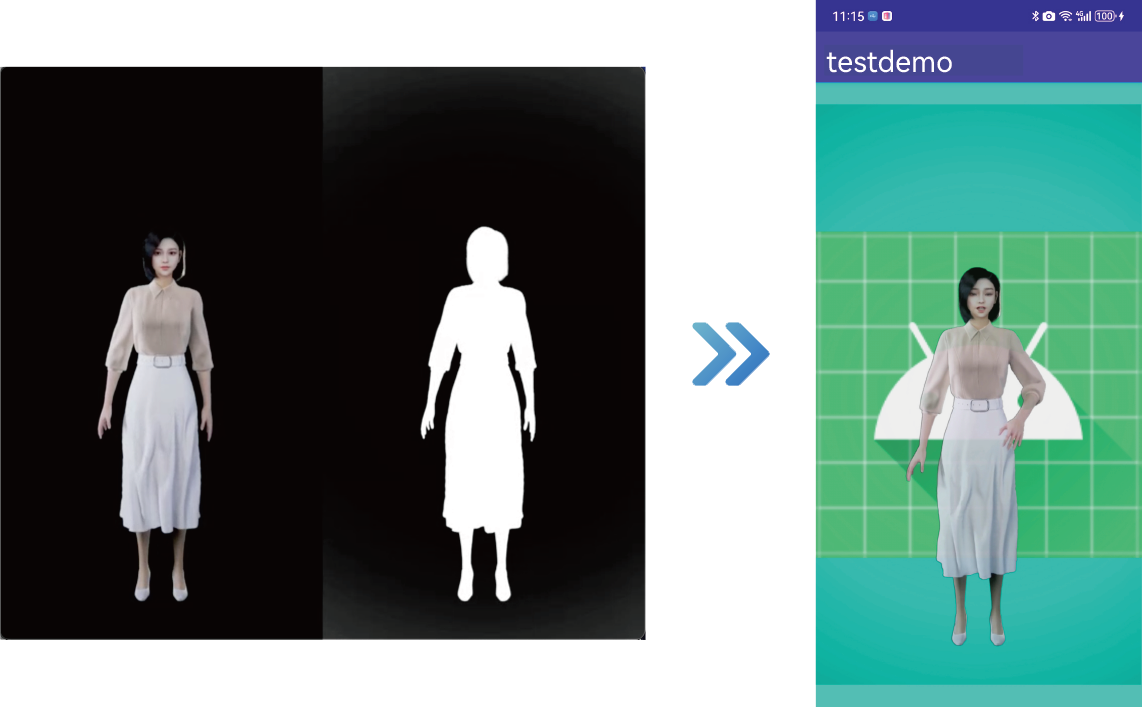
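For Method 2, the Alpha data is carried in the same buffer as the image instead of a separate alphaBuffer. The sketch below illustrates the idea on a single 8-bit plane, assuming a layout in which the Alpha plane is stitched directly below the image, so the stitched buffer measures width × (2 × height). The single-plane simplification, the "below" layout, and the helper names are assumptions; the stitching modes accepted by setAlphaStitchMode and the exact buffer formats are listed in the SDK API reference.

```java
/** Hypothetical helpers (not SDK API): an Alpha plane stitched below an 8-bit image plane. */
final class AlphaStitch {
    private AlphaStitch() {}

    /** Returns a width x (2 * height) buffer: image rows first, then Alpha rows. */
    static byte[] stitchBelow(byte[] image, byte[] alpha, int width, int height) {
        int plane = width * height;
        if (image.length != plane || alpha.length != plane) {
            throw new IllegalArgumentException("image and alpha must both be width x height");
        }
        byte[] stitched = new byte[2 * plane];
        System.arraycopy(image, 0, stitched, 0, plane);     // rows 0 .. height-1: image
        System.arraycopy(alpha, 0, stitched, plane, plane); // rows height .. 2*height-1: Alpha
        return stitched;
    }

    /** Reverse operation, useful for checking what a stitched buffer contains. */
    static byte[] extractAlpha(byte[] stitched, int width, int height) {
        int plane = width * height;
        if (stitched.length != 2 * plane) {
            throw new IllegalArgumentException("stitched buffer must be width x (2 * height)");
        }
        byte[] alpha = new byte[plane];
        System.arraycopy(stitched, plane, alpha, 0, plane);
        return alpha;
    }
}
```

Because rows are stored top to bottom, "stitching below" is just appending the Alpha plane; a left or right layout would instead interleave image and Alpha bytes row by row.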

SDK capture scenario

The implementation process for this scenario is illustrated in the following figure:

Take the following steps to implement this logic:

- On the broadcasting end, call the enableVirtualBackground[2/2] method to enable the background segmentation algorithm and obtain the Alpha data for the portrait area. Set the parameters as follows:
  - enabled: Set to true to enable the virtual background.
  - backgroundSourceType: Set to BACKGROUND_NONE (0) to segment the portrait from the background and process the background as Alpha data.

- Call setVideoEncoderConfiguration on the broadcasting end to set the video encoding properties, and set encodeAlpha to true. The Alpha data is then encoded and sent to the remote end.

- Render the local and remote views and implement the Alpha transparency effect. See the steps in the custom video capture scenario for details.

Raw video data scenario

The implementation process for this scenario is illustrated in the following figure:

Take the following steps to implement this logic:

- Call the registerVideoFrameObserver method to register a raw video frame observer and implement the corresponding callbacks as required.

- Use the onCaptureVideoFrame callback to obtain the captured video data and pre-process it as needed. You can modify the existing Alpha data or add Alpha data directly.

- Use the onPreEncodeVideoFrame callback to obtain the local video data before encoding, and modify or directly add Alpha data as needed.

- Use the onRenderVideoFrame callback to obtain the remote video data before it is rendered locally. You can modify the Alpha data, add Alpha data directly, or render the video image yourself based on the obtained Alpha data.
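When you render the remote video yourself from onRenderVideoFrame, the Alpha data decides how much of the remote image covers whatever is behind it. A minimal sketch of this "over" blend in Java follows. It assumes the frame has already been converted from YUV to packed 0xRRGGBB pixels and that the background (for example, the local game screen in the chroma keying scenario) has the same size; the class and method names are hypothetical.

```java
/** Hypothetical helper (not SDK API): blends a remote frame over a local background using its Alpha plane. */
final class AlphaCompositor {
    private AlphaCompositor() {}

    /**
     * @param fg    remote frame pixels as 0xRRGGBB (assumed already converted from YUV)
     * @param alpha one byte per pixel: 0 = background (transparent), 255 = foreground (opaque)
     * @param bg    local background pixels as 0xRRGGBB
     * @return composited pixels as 0xRRGGBB
     */
    static int[] blend(int[] fg, byte[] alpha, int[] bg) {
        if (fg.length != alpha.length || fg.length != bg.length) {
            throw new IllegalArgumentException("frame, alpha and background sizes differ");
        }
        int[] out = new int[fg.length];
        for (int i = 0; i < fg.length; i++) {
            int a = alpha[i] & 0xFF;
            out[i] = (mix(fg[i] >> 16, bg[i] >> 16, a) << 16) // red
                   | (mix(fg[i] >> 8, bg[i] >> 8, a) << 8)    // green
                   | mix(fg[i], bg[i], a);                     // blue
        }
        return out;
    }

    /** Blends one 8-bit channel: (a * f + (255 - a) * b) / 255, rounded to nearest. */
    private static int mix(int f, int b, int a) {
        f &= 0xFF;
        b &= 0xFF;
        return (a * f + (255 - a) * b + 127) / 255;
    }
}
```

Integer arithmetic keeps the per-pixel loop cheap; on a device this work would more typically run on the GPU, but the formula is the same.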

Development notes

- Implement the transparency properties of the app window yourself, and handle the transparency relationship when multiple windows are stacked on top of each other (by adjusting the zOrder of the windows).

- On Android, due to system limitations, only TextureView and SurfaceView are supported when setting the view.
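The first note is about ordering: when partially transparent windows overlap, the visible result depends on which window is drawn last. The model below is a hypothetical illustration, not an Android or SDK API. Each window is reduced to an 8-bit gray plane, an Alpha plane, and a zOrder, and the windows are composited back to front (painter's algorithm) with the "over" operator, so the window with the highest zOrder ends up on top.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Hypothetical model of stacked, partially transparent windows (not an Android or SDK API). */
final class LayerStack {
    private LayerStack() {}

    /** One window: an 8-bit gray plane, its Alpha plane, and a zOrder (higher = nearer the viewer). */
    static final class Layer {
        final String name;
        final int zOrder;
        final byte[] gray;
        final byte[] alpha;

        Layer(String name, int zOrder, byte[] gray, byte[] alpha) {
            this.name = name;
            this.zOrder = zOrder;
            this.gray = gray;
            this.alpha = alpha;
        }
    }

    /** Draws layers back to front over an opaque base value and returns the visible result. */
    static int[] composite(List<Layer> layers, int pixels, int baseGray) {
        List<Layer> ordered = new ArrayList<>(layers);
        ordered.sort(Comparator.comparingInt(l -> l.zOrder)); // lowest zOrder is drawn first
        int[] out = new int[pixels];
        Arrays.fill(out, baseGray);
        for (Layer layer : ordered) {
            for (int i = 0; i < pixels; i++) {
                int a = layer.alpha[i] & 0xFF;
                int src = layer.gray[i] & 0xFF;
                // "over" operator: this layer covers what is already drawn in proportion to its Alpha
                out[i] = (a * src + (255 - a) * out[i] + 127) / 255;
            }
        }
        return out;
    }
}
```

Swapping the zOrder of an opaque video window and a half-transparent overlay changes the output, which is why the stacking order has to be managed explicitly.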
