MetaKit

The MetaKit extension is an innovative product designed to enhance interactive video experiences. By integrating multiple advanced AI technologies, it provides users with creative and personalized video enhancement functions.

MetaKit adds rich interactive video effects that you can choose from according to your requirements:

- Social entertainment: Enhance social entertainment and live broadcasts with features like Animoji and portrait edge flames, providing more creativity and personalization for hosts.

- Online education: Create a more vivid and engaging teaching environment with 360-degree backgrounds to enhance students' interest in learning.

- Online conferences: Use 3D lighting to create a presentation environment comparable to professional effects, enhancing the visual impact of your presentations.

Understand the tech

The MetaKit extension includes the following key functions:

| Function | Description |

|---|---|

| Virtual human | Easily generate virtual characters and create unique virtual images with custom options like face pinching and fashion dressup. Capture user expressions in real time and render them back on the virtual image to enhance interaction. |

| Animoji | Apply various Animoji effects to portraits in real time using AR and face capture technology. Show real-time changes in head dynamics and expressions to display a unique personality. |

| Lighting | Provide users with precise and efficient light and shadow effects, including 3D light (one light with customizable motion trajectory), atmosphere light (simulating multiple real light effects with fixed motion trajectory), advertising light, and other modes. Intelligent light and shadow control allows users to experience more realistic effects in a virtual environment. |

| Atmospheric effects | Create an artistic atmosphere using lighting effects, including portrait edge flames, aurora, ripples, and other modes. |

| 360 Background | Provide users with customized panoramic virtual background effects. |

The MetaKit extension offers an open art ecosystem, supporting one-click import of Animoji and avatar images created according to Agora art standards. This provides users with more flexible creation and integration options.

To use this feature, contact technical support.

The effects of some functions are as follows:

- Virtual human (girl)

- Animoji (dog)

- Atmospheric effects (portrait edge flames)

- Lighting (ambient light)

- Lighting (3D light)

This page explains how to integrate the MetaKit extension into your project to use the virtual human, Animoji, lighting effects, and 360 background functions.

Prerequisites

To follow this procedure, you must have:

- Integrated v4.2.x or v4.3.x of the Video SDK and implemented basic real-time audio and video functions in your app. See SDK quickstart.

  Info:

  - When integrating through Maven Central, specify `io.agora.rtc:full-sdk:x.y.z` and replace `x.y.z` with the specific SDK version number.
  - The MetaKit extension uses the Face Capture extension (`libagora_face_capture_extension.so`) and the Virtual Background extension (`libagora_segmentation_extension.so`). You can delete unnecessary extensions as needed to reduce the size of the app.

- Android Studio v4.2 or above.

- An Android device model produced in 2019 or later, to ensure that the front camera and microphone are functioning properly.

- A computer that can access the Internet. If your network environment has a firewall deployed, refer to Firewall requirements to use the Agora services normally.

Project setup

To implement MetaKit effects in your app, open the SDK quickstart for Video Calling project and take the steps described below.

Integrate the extension

To integrate the MetaKit extension, take the following steps:

- Download and unzip the MetaKit Android extension.

- Open the folder and copy the files in the `/sdk` path to the corresponding project path.

  | Library | Function | Integration path |
  |---|---|---|
  | `AgoraMetaKit.aar` | Rendering runtime layer | `/app/libs` |
  | `metakit.jar` | Wrapper layer Java package | `/app/libs` |
  | `libagora_metakit_extension.so` | Wrapper layer | `/app/src/main/jniLibs/arm64-v8a` or `/app/src/main/jniLibs/armeabi-v7a` |

- In the project's `/app` directory, add dependencies for all `.jar` and `.aar` files located under the `libs` path in the dependencies section of the `build.gradle` file.

Configure MetaKit

To configure the extension, take the following steps:

-

Open the folder of the MetaKit extension for Android. The

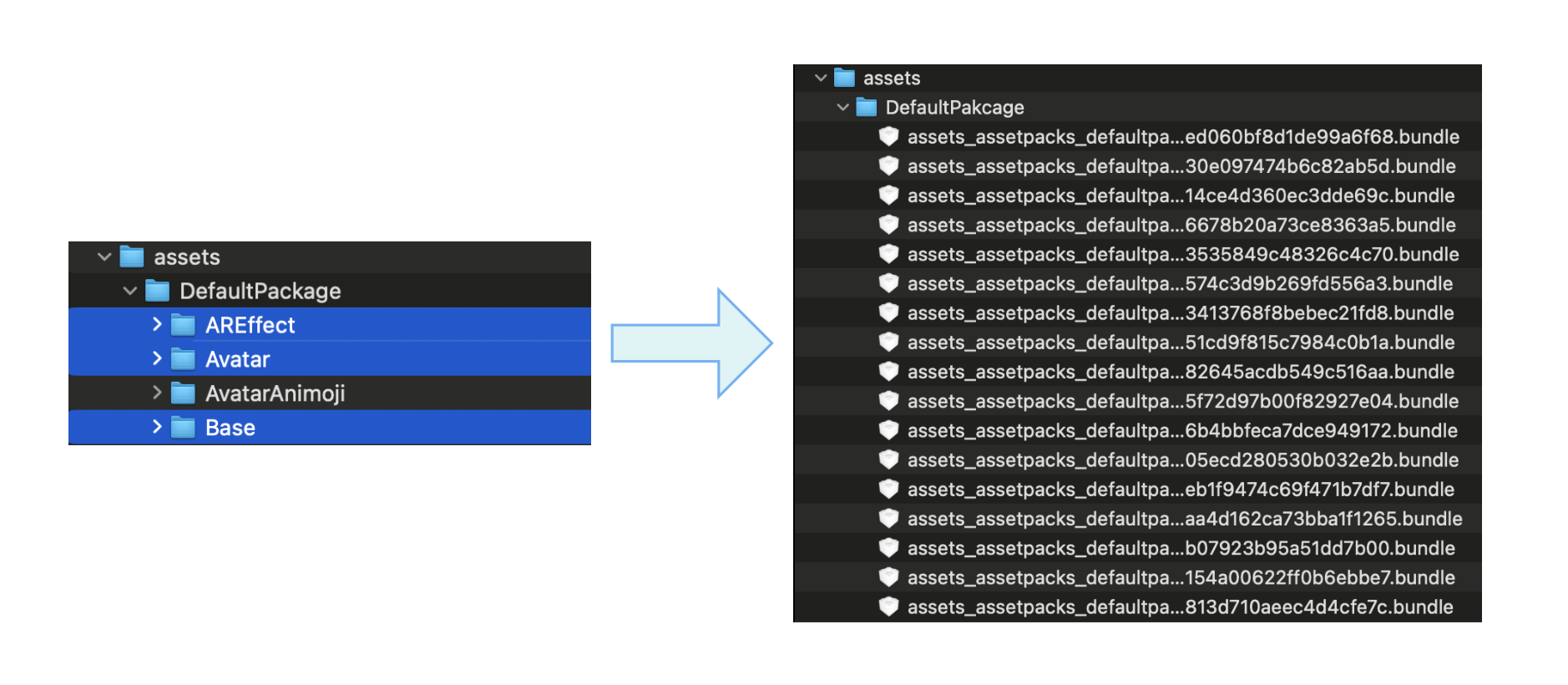

/assets/DefaultPackagepath contains the Bundle file resources required for different scenes and functions. The table below lists the resource name, purpose, and size:Name Required/Optional Usage Size Base Required Basic scene resources. Each functional module is built on this scene resource and includes related resources that support the hot update function. 2.38 MB Avatar Function-specific Virtual human model subpackage resources, including virtual human images such as girlandhuamulan. Supports face capture, face pinching, and dress-up capabilities.girl: 14.8 MBhuamulan: 3.2 MB (does not support face pinching and dress-up)AvatarAnimoji Function-specific Animoji model subpackage resources, including Animoji images such as dog,girlhead, andarkit. Supports face capture.dog: 1.4 MBgirlhead: 954 KBarkit: 44 KBAREffect Function-specific Lighting effects and 360 background subpackage resources, including 3D lighting, atmosphere lighting, advertising lighting, screen ripples, aurora effects, portrait edge flames, and other effects. 3.97 MB -

Combine the basic resources (

Base) and the subpackage resources (Avatar,AvatarAnimoji, andAREffect) of specific functional modules into a complete resource package to experience the corresponding functional module. The functional modules and their corresponding resource package combinations are shown in the following table:Functional module Resource package combination Virtual human Base+AvatarAnimoji Base+AvatarAnimojiLighting effects Base+AREffect360 Background Base+AREffect -

To experience the virtual human and 360 background features, combine the

Base,Avatar, andAREffectresources into a single directory, as shown below. After preparing the resource directory, place it in the SD card directory of the mobile device, such as/sdcard/metaAssets/15. When loading scene resources, set the absolute path of the resource directory to MetaKit.
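The combination rule in the table above can be sketched in plain Java. This is only an illustration: `ResourcePlanner` and `packagesFor` are hypothetical names, not part of the MetaKit extension, and the module names are taken from the table.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical helper, not part of the MetaKit SDK.
final class ResourcePlanner {
    // Functional module -> subpackage, as listed in the combination table.
    private static final Map<String, String> SUBPACKAGE = Map.of(
            "Virtual human", "Avatar",
            "Animoji", "AvatarAnimoji",
            "Lighting effects", "AREffect",
            "360 Background", "AREffect");

    // Returns the packages to merge into one resource directory.
    static Set<String> packagesFor(List<String> modules) {
        Set<String> result = new LinkedHashSet<>();
        result.add("Base"); // Base is required by every module
        for (String module : modules) {
            String subpackage = SUBPACKAGE.get(module);
            if (subpackage == null) {
                throw new IllegalArgumentException("Unknown module: " + module);
            }
            result.add(subpackage);
        }
        return result;
    }

    public static void main(String[] args) {
        // Virtual human plus 360 background, the combination used in the last step.
        System.out.println(packagesFor(List.of("Virtual human", "360 Background")));
    }
}
```

For the virtual human and 360 background combination, the helper returns `Base`, `Avatar`, and `AREffect`, which matches the directory described in the last step.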

Handle Android permissions

To request the required permissions, take the following steps:

- Navigate to the project's `/app/src/main` directory and add the permissions listed below to the `AndroidManifest.xml` file. The MetaKit extension primarily uses the following Android system permissions:

  | Permissions | Function | Description |
  |:---|:---|:---|
  | `CAMERA` | Access your phone's camera. | Functions such as expression driving and background segmentation require access to the camera for AI inference. |
  | `INTERNET` | Access the network. | Authorize the AI module when the extension is enabled. |
  | `READ_EXTERNAL_STORAGE` | Read external storage. | Read the Bundle resource file from the SD card. |
  | `WRITE_EXTERNAL_STORAGE` | Write to external storage. | Record SDK-related log files. |

- Android 6.0 and later versions enforce stricter permission management. Besides declaring permissions statically in `AndroidManifest.xml`, certain permissions must also be requested dynamically within the application's business logic.
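As a rough sketch of the dynamic request logic, the plain-Java helper below works out which of the listed permissions still need a runtime request. `PermissionPlanner` is a hypothetical name. In an Android app, the granted set would typically be built with `ContextCompat.checkSelfPermission`, and the returned list passed to `ActivityCompat.requestPermissions`. Those calls are left out so that the example runs without the Android SDK.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical helper, not part of the Android or Agora APIs.
final class PermissionPlanner {
    // Permissions from the table that Android requires to be requested at runtime.
    // INTERNET is a normal permission and only needs the manifest declaration.
    static final List<String> RUNTIME_PERMISSIONS = List.of(
            "android.permission.CAMERA",
            "android.permission.READ_EXTERNAL_STORAGE",
            "android.permission.WRITE_EXTERNAL_STORAGE");

    // Returns the runtime permissions that are not yet in the granted set.
    static List<String> missing(Set<String> granted) {
        List<String> result = new ArrayList<>();
        for (String permission : RUNTIME_PERMISSIONS) {
            if (!granted.contains(permission)) {
                result.add(permission);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Example: only the camera permission has been granted so far.
        System.out.println(missing(Set.of("android.permission.CAMERA")));
    }
}
```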

Select architecture

The MetaKit extension currently supports the arm64-v8a and armeabi-v7a architectures. To optimize the app size, select only the architectures you need during integration, for example by listing them in `abiFilters` under `ndk` in the `defaultConfig` block of the app's `build.gradle` file.

Implement the logic

Once the project configuration is complete, follow these steps to explore the various functional modules of the MetaKit extension:

Listen to extension events

When calling `create` to initialize `RtcEngine`, ensure the following configurations are performed in `RtcEngineConfig`:

- Call `addExtension` with `AgoraFaceCapturePlugin` (`agora_face_capture_extension`) and `MetaKitPlugin` (`agora_metakit_extension`). Then, implement the event callback interface `IMediaExtensionObserver` for extensions and register it for `onEvent` extension event callbacks.

- In the callback, specify `provider` as `agora_video_filters_metakit` and `extension` as `metakit` to filter events from the MetaKit extension. The `onEvent` event transmits engine status events transparently, such as `unityLoadFinish` (Unity environment loading completed) and `loadSceneResp` (scene resource loading completed).

Enable extensions

Before enabling the MetaKit extension, ensure that both the Face Capture extension and the Virtual Background extension are enabled.

Enable the Face Capture extension

To enable the Face Capture extension, follow these steps:

- Call `registerExtension` and `enableExtension` with the provider name `agora_video_filters_face_capture` and the extension name `face_capture`.

- Call `setExtensionProperty` to authenticate and authorize the extension. Use `authentication_information` as the key, and a value containing the company name (`company_id`) and the face capture certificate (`license`).

  Info: Contact Agora to obtain the company name and certificate.
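A minimal sketch of assembling the `authentication_information` value follows. It assumes the value is a flat JSON object with the two fields named above. `FaceCaptureAuth` is a hypothetical helper, and the company name and license strings are placeholders for the values obtained from Agora. The resulting string would be passed as the `value` argument of `setExtensionProperty`.

```java
// Hypothetical helper, not part of the Agora SDK.
final class FaceCaptureAuth {
    static final String PROVIDER = "agora_video_filters_face_capture";
    static final String EXTENSION = "face_capture";
    static final String KEY = "authentication_information";

    // Builds the JSON value; assumes a flat object with company_id and license.
    static String value(String companyId, String license) {
        return "{\"company_id\":" + quote(companyId)
                + ",\"license\":" + quote(license) + "}";
    }

    // Minimal JSON string quoting: escapes only double quotes and backslashes.
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\');
            }
            sb.append(c);
        }
        return sb.append('"').toString();
    }

    public static void main(String[] args) {
        // Placeholder credentials; replace them with the values provided by Agora.
        System.out.println(KEY + " = " + value("your_company_id", "your_license"));
    }
}
```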

Enable the Virtual Background extension

To enable the Virtual Background extension, take the following steps:

- Call `setParameters` to set `"rtc.video.seg_before_exts"` to `true`.

- Call `enableVirtualBackground` with the following configurations:

  - Set `backgroundSourceType` to `0` to process the background into alpha information, separating the portrait from the background.
  - Set `modelType` to `1` to select background processing suitable for all scenes.

Enable the MetaKit extension

To enable the MetaKit extension, follow these steps:

- Call `registerExtension` with the service provider name `agora_video_filters_metakit` and the extension name `metakit`.

- Call `enableExtension` with the same service provider name and extension name.

Initialize MetaKit

- To set the Android activity context for starting the rendering engine, call `setExtensionProperty` with the following parameters:

  - `key`: `setActivityContext`
  - `value`: The activity context address

- To initialize the MetaKit extension, call `setExtensionProperty` with the following parameters:

  - `key`: `initialize`
  - `value`: an empty string

Load scene resources

- When the `onEvent` callback captures the `unityLoadFinish` event, it indicates that the environment has been loaded. At this point, you can call `setExtensionProperty` to load the MetaKit scene resources. Use the following parameters:

  - `key`: `loadScene`
  - `value`: A string containing relevant information about the scene resources

- When the `onEvent` callback captures the `loadSceneResp` event, it indicates that the scene resources have been loaded. You can then follow these steps to experience the virtual human, Animoji, lighting effects, and 360 background modules.
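The event-driven sequence above (initialize, wait for `unityLoadFinish`, load the scene, wait for `loadSceneResp`) can be sketched as a small state holder. Everything here is illustrative. `MetaKitFlow` and `PropertySink` are hypothetical names, and `PropertySink` stands in for the engine's `setExtensionProperty(provider, extension, key, value)` call so that the sketch runs without the SDK. The `setActivityContext` step is omitted because it needs a real Android activity. The `loadScene` value assumes that `sceneInfo` and `extraCustomInfo` are sibling objects, as the key-value reference on this page suggests, and `initialize` is sent with `{}` as given there.

```java
// Hypothetical sketch, not part of the Agora SDK.
final class MetaKitFlow {
    static final String PROVIDER = "agora_video_filters_metakit";
    static final String EXTENSION = "metakit";

    // Stand-in for the engine's setExtensionProperty(provider, extension, key, value).
    interface PropertySink {
        void set(String provider, String extension, String key, String value);
    }

    private final PropertySink sink;
    private final String scenePath;
    boolean sceneReady = false;

    MetaKitFlow(PropertySink sink, String scenePath) {
        this.sink = sink;
        this.scenePath = scenePath;
    }

    // Sends the initialize key with an empty JSON object.
    void start() {
        sink.set(PROVIDER, EXTENSION, "initialize", "{}");
    }

    // Call this from the onEvent callback with the arguments it receives.
    void onEvent(String provider, String extension, String key, String value) {
        if (!PROVIDER.equals(provider) || !EXTENSION.equals(extension)) {
            return; // ignore events from other extensions
        }
        if ("unityLoadFinish".equals(key)) {
            sink.set(PROVIDER, EXTENSION, "loadScene", loadSceneValue(scenePath));
        } else if ("loadSceneResp".equals(key)) {
            sceneReady = true; // requestTexture can be sent from now on
        }
    }

    // Assumes the path contains no quotes or backslashes that need escaping.
    static String loadSceneValue(String scenePath) {
        return "{\"sceneInfo\":{\"scenePath\":\"" + scenePath + "\"},"
                + "\"extraCustomInfo\":{\"sceneIndex\":0}}";
    }

    public static void main(String[] args) {
        MetaKitFlow flow = new MetaKitFlow(
                (p, e, k, v) -> System.out.println(k + " -> " + v),
                "/sdcard/metaAssets/15");
        flow.start();
        flow.onEvent(PROVIDER, EXTENSION, "unityLoadFinish", "");
        flow.onEvent(PROVIDER, EXTENSION, "loadSceneResp", "");
        System.out.println("sceneReady = " + flow.sceneReady);
    }
}
```

Keeping the sequencing in one place makes it harder to send `loadScene` or `requestTexture` before the engine has reported that it is ready.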

Use the avatar effect

- Call `setExtensionProperty` to request texture and render the virtual human scene. Set `key` to `requestTexture` and `value` to the scene configuration information. To experience the virtual human feature, set `avatarMode` to `0` for the virtual human scene mode and specify `avatar` as your desired virtual human image, such as `girl` or `huamulan`.

  Note: In addition to the default avatars, `girl` and `huamulan`, the Agora MetaKit extension offers an open artistic ecosystem. It supports one-click import of virtual human models created according to Agora's art standards, providing users with more flexible creation and integration options. Contact Agora technical support to use this feature.

  After the scene rendering is complete, a Blendshape-driven virtual human image is displayed. It captures your facial expressions, makes the corresponding facial changes, and follows your head movements.

- Call `setExtensionProperty` to perform face pinching operations on the virtual human. Set `key` to `updateFace` and `value` to one or more sets of resource IDs for face pinching parts and their corresponding adjustment ranges. See face pinching for details.

- Call `setExtensionProperty` to perform dress-up operations on the virtual human. Set `key` to `updateDress` and `value` to an array of integers containing the resource IDs of the dressing parts. See dressing resources for details.

  Additionally, MetaKit supports switching the virtual human's appearance and perspective. For more details, refer to the virtual human key-value description.

Use the Animoji effect

Call `setExtensionProperty` to request the texture and render the Animoji scene. Set `key` to `requestTexture` and `value` to the scene configuration information. To experience the Animoji function, set `avatarMode` to `1` for Animoji scene mode. Set `avatar` to the Animoji image you want to use, such as `dog`, `girlhead`, or `arkit`.

In addition to the already available Animoji images (`dog`, `girlhead`, and `arkit`), the Agora MetaKit extension provides an open art ecosystem. It supports one-click import of Animoji images created according to Agora's art standards, offering users more flexible creation and integration options. Contact Agora technical support to use this feature.

Use the sticker effect

Call setExtensionProperty to request the texture and render the sticker scene. Set key to loadMaterial and value to the material configuration. Specify the corresponding resource name depending on the sticker that you want to use. For example, material_sticker_glass for glasses.

In addition to the already available stickers veil, glass, facemask, and dragonhat, the Agora MetaKit extension provides an open art ecosystem and supports one-click import of sticker images created according to Agora's art standards. This offers users more flexible creation and integration options. Contact Agora technical support to use this feature.

When the onEvent callback captures the materialLoaded event, it means that the scene view has been added. At this time, a glasses sticker covering the eyes will be displayed in the view, following your head movements.

Apply lighting effects and 360 background

- Call `setExtensionProperty` to request the texture and render a scene with lighting effects and 360 background features. The `key` is `requestTexture`, and the `value` contains the configuration information of the scene. To experience lighting effects and the 360 background feature, set `avatarMode` to `2`, which corresponds to lighting effects and 360 background mode.

- Experience lighting effects and 360 background.

  - Lighting effects:

    Call `setExtensionProperty` to set up lighting effects. The `key` is `setEffectVideo`, and the `value` contains a series of lighting materials and their corresponding parameter configurations. The MetaKit extension provides lighting effects such as 3D lighting, screen ripples, aurora effects, and portrait edge flames, and supports fine-tuning of parameters such as color, intensity, and range. For example, you can overlay advertising lights on a live video. See the Lighting effects key-value documentation for more details.

  - 360 background:

    Call `setExtensionProperty` to set up a 360 panoramic background. The `key` is `setBGVideo`, and the `value` sets the background mode, resource path, and rotation angle.

    You can also call `setExtensionProperty` to enable the gyroscope. Specify `key` as `setCameraGyro` and enable the gyroscope function in the `value` to further enhance the interactivity and immersion of the background.

    After successfully setting this effect, the video background is replaced with the specified resource, and you can experience the panoramic effect by rotating the phone. For more configurations, see the 360 Background key-value documentation.

Release resources

When you are done using the extension, stop texture requests, unload scene resources, and destroy the engine.
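The release sequence can be sketched as an ordered list of key-value pairs, each of which would be sent with `setExtensionProperty` using the provider `agora_video_filters_metakit` and the extension `metakit`. `MetaKitRelease` is a hypothetical helper. The sketch assumes that a `requestTexture` value carrying only `index` and `enable` is enough to stop texture requests, and it uses `{}` for `unloadScene` and `destroy` as listed in the key-value reference.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of the Agora SDK.
final class MetaKitRelease {
    // Returns {key, value} pairs in the order they should be sent.
    static List<String[]> releaseSteps() {
        List<String[]> steps = new ArrayList<>();
        // 1. Stop texture requests (assumption: config can be omitted when disabling).
        steps.add(new String[] {"requestTexture", "{\"index\":0,\"enable\":false}"});
        // 2. Unload the scene resources.
        steps.add(new String[] {"unloadScene", "{}"});
        // 3. Destroy the rendering engine.
        steps.add(new String[] {"destroy", "{}"});
        return steps;
    }

    public static void main(String[] args) {
        for (String[] step : releaseSteps()) {
            System.out.println(step[0] + " -> " + step[1]);
        }
    }
}
```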

Reference

This section completes the information on this page, or points you to documentation that explains other aspects about this product.

Key-value description

To implement the capabilities of the MetaKit extension, use the setExtensionProperty method provided by the Agora Video SDK v4.x. Pass in the specified key and value as follows:

- `key`: Corresponds to different interfaces of the MetaKit extension.
- `value`: Encapsulates some or all of the interface parameters in the JSON format.

This guide explains how to use different key-value pairs to implement the MetaKit extension's virtual human, Animoji, lighting effects, and 360 background function modules.

Basic functions

This section covers how to implement the basic functions of the MetaKit extension, such as initialization, loading scene resources, enabling texture requests, switching texture scene modes, and avatars. Once you have implemented the basic functions, you can explore the specific functional modules.

Set up the Android Activity Context

- `key`: `setActivityContext`
- `value`: Object. Contains the following field:
  - `activityContext`: String. The address of the activity context.

Initialize the engine

- `key`: `initialize`
- `value`: `{}`

Load scene resources

- `key`: `loadScene`
- `value`: Object. Contains the following fields:
  - `sceneInfo`: Object. Contains the following field:
    - `scenePath`: String. The path of the scene asset package, for example, `"/sdcard/metaAssets/15"`.
  - `extraCustomInfo`: Object. Contains the following field:
    - `sceneIndex`: Int. The index of the scene, currently only supports `0`.

Enable texture request

Request a texture and render the specified scene content on the texture. This includes virtual humans, Animoji, lighting effects, and 360 backgrounds.

- `key`: `requestTexture`
- `value`: Object. Contains the following fields:
  - `index`: Int. Texture index, currently only supports `0`.
  - `enable`: Boolean. Whether to enable the texture request. `true`: Enable; `false`: Disable (default).
  - `config`: Object. Contains the following fields:
    - `width`: Int. The width of the view (px). Set this to the current camera acquisition resolution, the width and height of the screen layout, or a common resolution like 720 × 1280.
    - `height`: Int. The height of the view (px). Set this to the current camera acquisition resolution, the width and height of the screen layout, or a common resolution like 720 × 1280.
    - `extraInfo`: Object. Contains the following fields:
      - `sceneIndex`: (optional) Int. Scene index, currently only supports `0`.
      - `avatarMode`: (optional) Int. Scene mode. `0`: Avatar (default); `1`: Animoji; `2`: Light or background.
      - `avatar`: (optional) String. Avatar or Animoji image. If `avatarMode` is `0` (avatar), set to `girl` or `huamulan` (default is `girl`); if `avatarMode` is `1` (Animoji), set to `dog`, `girlhead`, or `arkit` (default is `dog`).
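As an illustration of the field layout, the sketch below assembles a `requestTexture` value as a JSON string. `RequestTexture` is a hypothetical helper. The nesting of `extraInfo` inside `config` is an assumption drawn from the field list above, so check it against the SDK sample before relying on it.

```java
// Hypothetical helper, not part of the Agora SDK.
final class RequestTexture {
    // Builds the value for an enabled texture request.
    // avatar may be null, in which case the field is omitted and the default applies.
    static String value(int width, int height, int avatarMode, String avatar) {
        if (avatarMode < 0 || avatarMode > 2) {
            throw new IllegalArgumentException("avatarMode must be 0, 1, or 2");
        }
        // Assumes avatar names contain no characters that need JSON escaping.
        String extraInfo = "{\"sceneIndex\":0,\"avatarMode\":" + avatarMode
                + (avatar == null ? "" : ",\"avatar\":\"" + avatar + "\"") + "}";
        // Assumption: extraInfo is nested inside config.
        return "{\"index\":0,\"enable\":true,\"config\":{\"width\":" + width
                + ",\"height\":" + height + ",\"extraInfo\":" + extraInfo + "}}";
    }

    public static void main(String[] args) {
        // Animoji mode with the dog image at 720 x 1280.
        System.out.println(value(720, 1280, 1, "dog"));
        // Lighting and 360 background mode needs no avatar.
        System.out.println(value(720, 1280, 2, null));
    }
}
```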

The requestTexture and addSceneView methods can both be used to render a specified scene on TextureView. Agora recommends using requestTexture for better rendering performance and lower latency. The differences are as follows:

- `requestTexture` does not require passing the render target `TextureView` to the MetaKit extension; it automatically generates and sends back texture data. `addSceneView` requires manual creation and management of `TextureView`.
- With `requestTexture`, the obtained texture is directly rendered, previewed, encoded by the SDK, and transmitted to the remote end. `addSceneView` requires an additional call to `enableSceneVideo` to enable scene screen capture.
- `requestTexture` supports a single view; `addSceneView` supports multiple views.
- For scene mode or avatar switching, use `requestTexture` to request textures and `switchTextureAvatarMode` for scene switching. Use `addSceneView` to add scene views and `switchAvatarMode` to complete scene switching.
- To release scene resources, use `requestTexture` and set `enable` to `false` to stop texture requests. If you added a scene view using `addSceneView`, use `removeSceneView` to remove it.

Switch texture scene

After enabling texture requests, switch the scene mode of the texture view, or the virtual human or Animoji image in the scene.

- `key`: `switchTextureAvatarMode`
- `value`: Object. Contains the following fields:
  - `index`: Int. Texture index, currently only supports `0`.
  - `mode`: (optional) Int. Scene mode to switch to. `0`: Avatar; `1`: Animoji; `2`: Video capture screen.
  - `avatar`: (optional) String. Avatar or Animoji to switch to. If `mode` is `0` (avatar), set to `girl` or `huamulan`; if `mode` is `1` (Animoji), set to `dog`, `girlhead`, or `arkit`.

Add scene view

Add a MetaKit scene to a native view and render the specified scene content. This includes virtual human, Animoji, lighting effects, and 360 background.

- Supports adding up to 8 scene views.

- Currently, only lighting and background effects for video capture are supported. To enable `backgroundEffect`, `avatarMode` must be set to `2`.

- `key`: `addSceneView`
- `value`: Object. Contains the following fields:
  - `view`: Int64. The address handle of the view.
  - `config`: Object. Contains the following fields:
    - `width`: (optional) Int. The width of the view (px). Defaults to full screen if not specified.
    - `height`: (optional) Int. The height of the view (px). Defaults to full screen if not specified.
    - `extraInfo`: Object. Contains the following fields:
      - `sceneIndex`: Int. Scene index, currently only supports `0`.
      - `avatarMode`: (optional) Int. Scene mode. `0`: (default) Avatar; `1`: Animoji; `2`: Video capture screen.
      - `avatar`: (optional) String. Avatar or Animoji image. If `avatarMode` is `0` (avatar), set to `girl` or `huamulan` (default is `girl`). If `avatarMode` is `1` (Animoji), set to `dog`, `girlhead`, or `arkit` (default is `dog`).
      - `backgroundEffect`: (optional) Boolean. Enables lighting effects and 360 background functions. `true`: Enable; `false`: (default) Disable.

Switch scene view

After adding a scene view, you can switch the scene mode, or the virtual human or Animoji image in the scene.

- `key`: `switchAvatarMode`
- `value`: Object. Contains the following fields:
  - `viewAddress`: Int64. The address handle of the view.
  - `mode`: (optional) Int. Specifies the scene mode to switch to. `0`: Avatar; `1`: Animoji; `2`: Video capture screen.
  - `avatar`: (optional) String. Specifies the avatar or Animoji to switch to. If `mode` is `0` (avatar), set to `girl` or `huamulan`. If `mode` is `1` (Animoji), set to `dog`, `girlhead`, or `arkit`.

Enable scene view capture

After enabling scene view capture, call joinChannel to join the channel and publish the video stream of the scene view.

- `key`: `enableSceneVideo`
- `value`: Object. Contains the following fields:
  - `view`: Int64. The address handle of the view.
  - `enable`: (optional) Boolean. Enables scene view capture. `true`: Enable; `false`: (default) Disable.

Remove scene view

Remove the MetaKit scene view from view.

- `key`: `removeSceneView`
- `value`: Object. Contains the following field:
  - `view`: Int64. The address handle of the view.

Unload scene resources

- `key`: `unloadScene`
- `value`: `{}`

Destroy engine

- `key`: `destroy`
- `value`: `{}`

Virtual human

The MetaKit extension allows you to switch the image, viewpoint, face, and outfit of the avatar. To experience the avatar-related functions, set avatarMode to 0 when enabling texture request or adding scene view.

In addition to the existing girl and huamulan avatars, the Agora MetaKit extension provides an open art ecosystem and supports one-click import of avatar models made according to Agora's art standards, providing users with more flexible creation and integration options. Contact Agora technical support to use this feature.

Switch virtual human perspective

- `key`: `setCamera`
- `value`: Object. Contains the following field:
  - `viewMode`: Int. The avatar camera view. `0`: Show the avatar's full body; `1`: (default) Focus on the avatar's upper body; `2`: Focus on the avatar's face.

Virtual human face pinching

The MetaKit extension provides a set of face-pinching resources for virtual images.

Currently only the girl avatar supports face pinching.

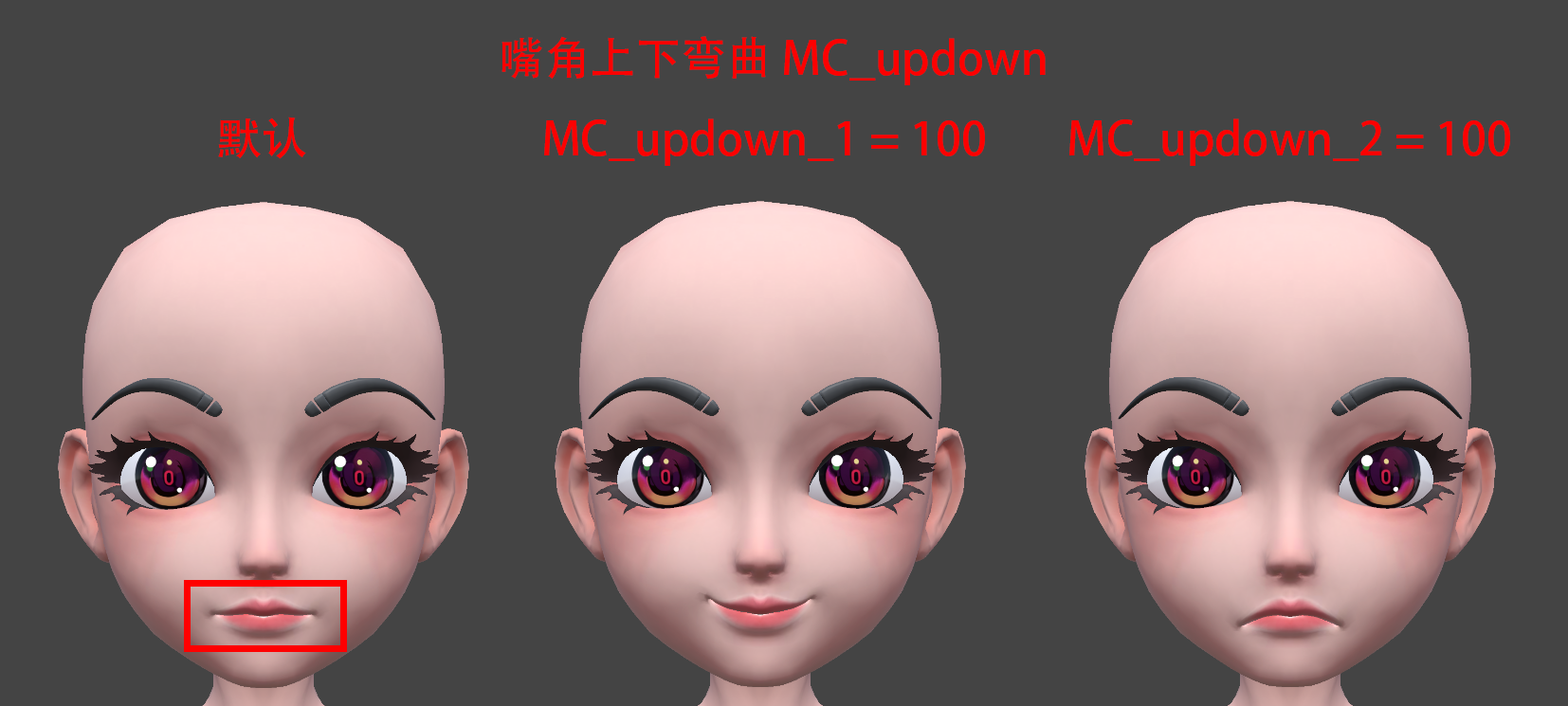

- `key`: `updateFace`

- `value`: Object. Contains the following fields:
  - `key`: String. Resource ID, such as `MC_updown_1` (upward bend of the mouth corner) and `MC_updown_2` (downward bend of the mouth corner). See Face pinching resources for details.
  - `value`: Int. Adjustment range. The value range is [0, 100], and the default value is 50.

  You can pass in multiple sets of face-pinching resource IDs (`key`) and corresponding adjustment ranges (`value`) to achieve the final face-pinching effect. For example, you can set both `MC_updown_1` and `MC_updown_2` to 100.
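The following sketch shows one way to assemble an `updateFace` value for several resource IDs while enforcing the [0, 100] range. `UpdateFace` is a hypothetical helper. The outer shape, an object whose `value` field holds an array of `key` and `value` pairs, is an assumption and is not confirmed by this page, so verify it against the SDK sample before use.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not part of the Agora SDK.
final class UpdateFace {
    // Assumed shape: {"value":[{"key":"<resource ID>","value":<0..100>}, ...]}
    static String value(Map<String, Integer> adjustments) {
        StringBuilder sb = new StringBuilder("{\"value\":[");
        boolean first = true;
        for (Map.Entry<String, Integer> entry : adjustments.entrySet()) {
            int amount = entry.getValue();
            if (amount < 0 || amount > 100) {
                throw new IllegalArgumentException(
                        entry.getKey() + " is outside the range [0, 100]: " + amount);
            }
            if (!first) {
                sb.append(',');
            }
            sb.append("{\"key\":\"").append(entry.getKey())
              .append("\",\"value\":").append(amount).append('}');
            first = false;
        }
        return sb.append("]}").toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the insertion order of the resource IDs.
        Map<String, Integer> adjustments = new LinkedHashMap<>();
        adjustments.put("MC_updown_1", 100);
        adjustments.put("MC_updown_2", 100);
        System.out.println(value(adjustments));
    }
}
```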

Avatar dressup

The MetaKit extension provides a set of dress-up resources for avatars.

Currently only the girl avatar supports dressup.

- `key`: `updateDress`

- `value`: Object. Contains the following field:
  - `id`: Int[]. An Int array consisting of resource IDs of multiple clothing items or body parts. Supports dressing operations on multiple items or parts, such as hair, tops, jackets, and pants. Each part provides multiple dressing resources to choose from, that is, each part corresponds to multiple dressing resource IDs. Only one resource can be specified for each part at a time. See Dress-up resources for details.

The recommended set combinations are as follows:

- Set 1

- Set 2

Animoji

The MetaKit extension allows you to switch the image of Animoji. To experience Animoji-related functions, set avatarMode to 1 when enabling texture request or adding scene view.

In addition to the existing dog, girlhead and arkit Animoji, the Agora MetaKit extension provides an open art ecosystem and supports one-click import of Animoji images made according to Agora's art standards, providing users with more flexible creation and integration options. Contact Agora technical support to use this feature.

Adjust rendering level

The MetaKit extension provides three rendering levels: low, medium, and high. Choose the rendering level that best balances device performance and rendering quality.

Currently, only the dog Animoji image supports adjusting the rendering level.

- `key`: `setRenderQuality`
- `value`: Object. Contains the following field:
  - `general`: Int. `0`: Low configuration; `1`: (default) Medium configuration; `2`: High configuration.

Lighting effects

The MetaKit extension provides lighting effects such as 3D lighting, screen ripples, aurora effects, and portrait edge flames, and supports fine-tuning the color, intensity, range, and other parameters of the lighting effects. To experience the lighting effects-related functions, set avatarMode to 2 when enabling texture requests or set backgroundEffect to true when adding a scene view.

Set special effect material

- `key`: `setEffectVideo`
- `value`: Object. Contains the following fields:
  - `id`: Int. Special effect material ID.
  - `enable`: Boolean. Whether to enable the special effect. `true`: Enable; `false`: Disable.
  - `param`: (optional) Object. Each special effect material ID corresponds to a set of configuration parameters, which allows you to fine-tune the color, intensity, range, and so on of the lighting effect. If you do not fill in the parameters, the default parameter configuration is used.

The mapping relationship between special effect material ID and configuration parameters is as follows:

| ID | Effect | Parameters |
|---|---|---|
| 1001 | 3D Lighting | `color` (Int64): Lighting color. When passing the parameter, the hexadecimal color needs to be converted to an Int64 value. For example, for red, the hexadecimal color is #FF0000, and the converted Int64 value is 16711680.<br>`intensity` (Float): Light intensity. The recommended value range is [1.0, 2.0]. The default value is 1.6.<br>`scale` (Float): Lighting scale. The recommended range is [0.3, 0.6]. The default value is 0.4. |
| 1002 | Screen ripples | `color` (Int64): Ripple color. When passing parameters, the hexadecimal color needs to be converted to an Int64 value. For example, for red, the hexadecimal color is #FF0000, and the converted Int64 value is 16711680.<br>`speed` (Float): Fluctuation speed. The recommended value range is [-0.2, 0.2]. The default value is -0.12.<br>`scale` (Float): Ripple size. The recommended value range is [3.0, 6.0]. The default value is 4.0. |
| 1003 | Aurora | `color` (Int64): Aurora color. When passing parameters, the hexadecimal color needs to be converted to an Int64 value. For example, for red, the hexadecimal color is #FF0000, and the converted Int64 value is 16711680.<br>`intensity` (Float): Aurora intensity. The recommended value range is [0.8, 1.5]. The default value is 1.0. |
| 2001 | Portrait edge flame | `color` (Int64): Flame color. When passing parameters, the hexadecimal color needs to be converted to an Int64 value. For example, for red, the hexadecimal color is #FF0000, and the converted Int64 value is 16711680.<br>`intensity` (Float): Flame intensity. The recommended value range is [0.2, 1.5]. The default value is 0.2. |
| 3001 | Ambient lighting set | N/A |
| 3002 | Advertising lights | `startColor` (Int64): The initial color of the advertising light. When passing parameters, the hexadecimal color needs to be converted to an Int64 value. For example, for red, the hexadecimal color is #FF0000, and the converted Int64 value is 16711680.<br>`endColor` (Int64): The end color of the advertising light. When passing parameters, the hexadecimal color needs to be converted to an Int64 value. After configuring the starting color, a gradient effect from the initial color to the ending color is created.<br>`size` (Float): The size of the advertising light texture. The recommended value range is [8, 15]. The default value is 10.<br>`intensity` (Float): Advertising light intensity. The recommended value range is [100, 1000]. The default value is 1000.<br>`range` (Float): The distance of the advertising light. The recommended range is [10, 40]. The default value is 15. |
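The hexadecimal-to-Int64 conversion described in the table can be sketched as follows, together with a `setEffectVideo` value for 3D lighting (ID 1001) that uses the documented default intensity and scale. `EffectVideo` is a hypothetical helper, and the sketch assumes colors are written as `#RRGGBB`.

```java
// Hypothetical helper, not part of the Agora SDK.
final class EffectVideo {
    // Converts a color such as "#FF0000" to the integer value the color parameters expect.
    static long colorToInt64(String hex) {
        String digits = hex.startsWith("#") ? hex.substring(1) : hex;
        return Long.parseLong(digits, 16);
    }

    // Builds a setEffectVideo value that enables 3D lighting (ID 1001).
    static String lighting3d(String hexColor, double intensity, double scale) {
        return "{\"id\":1001,\"enable\":true,\"param\":{\"color\":" + colorToInt64(hexColor)
                + ",\"intensity\":" + intensity
                + ",\"scale\":" + scale + "}}";
    }

    public static void main(String[] args) {
        System.out.println(colorToInt64("#FF0000")); // prints 16711680
        System.out.println(lighting3d("#FF0000", 1.6, 0.4));
    }
}
```

Red works out as 0xFF0000 = 255 × 65536 = 16711680, which matches the value given in the table.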

360 Background

The MetaKit extension allows you to enable 360-degree panoramic background mode, customize background replacement resources, and enable the gyroscope function to enhance the interactivity and immersion of the scene background. To experience 360-degree background-related functions, set avatarMode to 2 when enabling texture request or set backgroundEffect to true when adding a scene view.

Set replacement resource

After successful setting, you can observe that the video background is replaced with the specified resource, and you can experience the panoramic effect by rotating the phone.

- `key`: `setBGVideo`
- `value`: Object. Contains the following fields:
  - `mode`: String. Set to `tex360`, which means 360-degree panoramic background.
  - `param`: Object. Contains the following fields:
    - `path`: String. Specifies the URL or local path of the background resource.
    - `rotation`: (optional) Int. Rotation angle, default value is 0.

Enable background gyroscope

The gyroscope function is only supported after successfully setting up a 360-degree panoramic background. Enabling the gyroscope function can further enhance the interactivity and immersion of the background.

- `key`: `setCameraGyro`
- `value`: Object. Contains the following field:
  - `state`: String. Background gyroscope function status. `on`: Enabled; `off`: (default) Disabled.
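A minimal sketch of the two 360 background values follows. `Background360` is a hypothetical helper, and the resource path in `main` is a placeholder. The sketch assumes `state` takes the strings `on` and `off` listed in the field description.

```java
// Hypothetical helper, not part of the Agora SDK.
final class Background360 {
    // Builds the setBGVideo value for a 360-degree panoramic background.
    static String setBgVideo(String path, int rotation) {
        return "{\"mode\":\"tex360\",\"param\":{\"path\":" + quote(path)
                + ",\"rotation\":" + rotation + "}}";
    }

    // Builds the setCameraGyro value; assumes state is the string "on" or "off".
    static String setCameraGyro(boolean enabled) {
        return "{\"state\":\"" + (enabled ? "on" : "off") + "\"}";
    }

    // Minimal JSON string quoting: escapes only double quotes and backslashes.
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\');
            }
            sb.append(c);
        }
        return sb.append('"').toString();
    }

    public static void main(String[] args) {
        // Placeholder path; point it at your own panoramic resource.
        System.out.println(setBgVideo("/sdcard/metaAssets/pano.jpg", 0));
        // Enable the gyroscope only after the background has been set.
        System.out.println(setCameraGyro(true));
    }
}
```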

Face-pinching resources

This section introduces the virtual human face-pinching resources provided by the MetaKit extension.

Girl

This section introduces the face-shaping resources for girl.

Face

An example of lifting (CK_raise_1) and lowering (CK_raise_2) the cheeks is shown in the following video:

The girl resource supports face-pinching operations on the following parts of the face:

| Resource ID | Location |

|---|---|

| FE_raise_1 | Forehead protrusion |

| FE_raise_2 | Forehead collapse |

| TP_raise_1 | Temple protrusion |

| TP_raise_2 | Temple collapse |

| CK_raise_1 | Cheek raise |

| CK_raise_2 | Cheek collapse |

| MD_width_1 | Mandible outward |

| MD_width_2 | Mandible inward |

| MD_updown_1 | Mandible down |

| MD_updown_2 | Mandible up |

| C_width_1 | Chin stretch (left and right) |

| C_width_2 | Chin tightening (left and right) |

| C_updown_1 | Chin stretch (up and down) |

| C_updown_2 | Chin tightening (up and down) |

Eyebrow

The following are examples of adjusting the eyebrows to be longer (EB_length_1) and shorter (EB_length_2):

The girl resource supports face-pinching operations on the following parts of the eyebrows:

| Resource ID | Location |

|---|---|

| EB_width_1 | Eyebrows moved inwards |

| EB_width_2 | Eyebrows moved outwards |

| EB_updown_1 | Eyebrows moved down |

| EB_updown_2 | Eyebrows moved up |

| EB_thickness | Adjust the thickness of eyebrows |

| EBIN_updown_1 | Inner eyebrow moved up |

| EBIN_updown_2 | Inner eyebrow moved down |

| EBMID_updown_1 | Middle eyebrow moved up |

| EBMID_updown_2 | Middle eyebrow moved down |

| EB_length_1 | Lengthen eyebrows |

| EB_length_2 | Shorten eyebrows |

| EBOUT_updown_1 | Outer eyebrow high position |

| EBOUT_updown_2 | Outer eyebrow low position |

Eye

The following are examples of adjusting the overall enlargement (E_size_1) and shrinking of the eyes (E_size_2):

The girl resource supports face-pinching operations on the following parts of the eyes:

| Resource ID | Location |

|---|---|

| E_width_1 | Eyes inward |

| E_width_2 | Eyes outward |

| E_updown_1 | Eyes up adjustment |

| E_updown_2 | Eyes down adjustment |

| IC_width_1 | Inner corner of eye facing inward |

| IC_width_2 | Inner corner of eye facing outward |

| IC_updown_1 | Inner corner of eye upward |

| IC_updown_2 | Inner corner of eye downward |

| UEIN_updown_1 | Upper eyelid tip up |

| UEIN_updown_2 | Upper eyelid tip down |

| UE_updown_1 | Upper eyelid upwards |

| UE_updown_2 | Upper eyelid downwards |

| UEOUT_updown_1 | Upper eyelid ends upward |

| UEOUT_updown_2 | Upper eyelid ends downward |

| LE_updown_1 | Lower eyelid downwards |

| LE_updown_2 | Lower eyelid upwards |

| OC_width_1 | Outer corner of eye inward |

| OC_width_2 | Outer corner of eye outward |

| OC_updown_1 | Outer corner of eye upward |

| OC_updown_2 | Outer corner of eye downward |

| E_rotate_1 | Eye rotation 1 |

| E_rotate_2 | Eye rotation 2 |

| E_size_1 | Enlarge the entire eye |

| E_size_2 | Reduce the entire eye size |

| EL_updown_1 | Eyelids wider |

| EL_updown_2 | Eyelids narrower |

Nose

The following are examples of adjusting the overall enlargement (NT_size_1) and shrinking (NT_size_2) of the nose tip:

The girl resource supports face-pinching operations on the following parts of the nose:

| Resource ID | Location |

|---|---|

| N_width_1 | Enlarge the nose (left and right) |

| N_width_2 | Shrink the nose (left and right) |

| N_updown_1 | Nose up |

| N_updown_2 | Nose down |

| NB_raise_1 | Nose bridge raised |

| NB_raise_2 | Nose bridge concave |

| NT_size_1 | Enlarge nose tip |

| NT_size_2 | Shrink nose tip |

| NW_width_1 | Nose wings outward |

| NW_width_2 | Nose wings inward |

| NW_updown_1 | Nose wings upward |

| NW_updown_2 | Nose wings downward |

Mouth

The following are examples of adjusting the mouth to move down (M_updown_1) and up (M_updown_2):

The girl resource supports face-pinching operations on the following parts of the mouth:

| Resource ID | Location |

|---|---|

| UL_width_1 | Wider upper lip |

| UL_width_2 | Narrower upper lip |

| LL_width_1 | Wider lower lip |

| LL_width_2 | Narrower lower lip |

| MC_updown_1 | Mouth corners curved upward |

| MC_updown_2 | Mouth corners curved downward |

| M_size_1 | Enlarge the mouth (left and right) |

| M_size_2 | Shrink the mouth (left and right) |

| M_updown_1 | Mouth downward |

| M_updown_2 | Mouth upward |

JSON example

The complete face-shaping JSON is as follows:

Dress-up resources

This section introduces the virtual human dress-up resources provided by the MetaKit extension.

Girl

The parts of the girl's outfit and their corresponding resource IDs are as follows:

| Clothing item/Body part | Resource ID |

|---|---|

| Hair | 10000, 10001, 10002 |

| Eyebrows | 10100, 10101, 10102 |

| Blush | 10401, 10402 |

| Headdress | 10801 |

| Tops and jackets | 12100, 12101, 12102 |

| Gloves | 12501 |

| Pants | 14100, 14101, 14102 |

| Socks | 14301 |

| Shoes | 15000, 15001, 15002 |

JSON example

The complete JSON for the replacement is as follows:
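As a minimal sketch of how an `updateDress` value could be assembled and checked in Java, the helper below uses the resource IDs from the table above and enforces the rule that only one resource can be specified for each part. `UpdateDress` is a hypothetical helper, and the IDs chosen in `main` are an arbitrary illustration, not one of the recommended sets.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical helper, not part of the Agora SDK.
final class UpdateDress {
    // Clothing item or body part -> resource IDs, copied from the table above.
    static final Map<String, List<Integer>> PARTS = Map.of(
            "Hair", List.of(10000, 10001, 10002),
            "Eyebrows", List.of(10100, 10101, 10102),
            "Blush", List.of(10401, 10402),
            "Headdress", List.of(10801),
            "Tops and jackets", List.of(12100, 12101, 12102),
            "Gloves", List.of(12501),
            "Pants", List.of(14100, 14101, 14102),
            "Socks", List.of(14301),
            "Shoes", List.of(15000, 15001, 15002));

    // Finds the part that a resource ID belongs to.
    static String partOf(int id) {
        for (Map.Entry<String, List<Integer>> entry : PARTS.entrySet()) {
            if (entry.getValue().contains(id)) {
                return entry.getKey();
            }
        }
        throw new IllegalArgumentException("Unknown resource ID: " + id);
    }

    // Builds {"id":[...]} and rejects two resources for the same part.
    static String value(List<Integer> ids) {
        Set<String> usedParts = new HashSet<>();
        StringBuilder sb = new StringBuilder("{\"id\":[");
        for (int i = 0; i < ids.size(); i++) {
            String part = partOf(ids.get(i));
            if (!usedParts.add(part)) {
                throw new IllegalArgumentException("More than one resource for " + part);
            }
            if (i > 0) {
                sb.append(',');
            }
            sb.append(ids.get(i));
        }
        return sb.append("]}").toString();
    }

    public static void main(String[] args) {
        // One hair, one top, and one pants resource.
        System.out.println(value(List.of(10001, 12100, 14102)));
    }
}
```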